DeepSpeed Compression: A composable library for extreme compression and zero-cost quantization - Microsoft Research

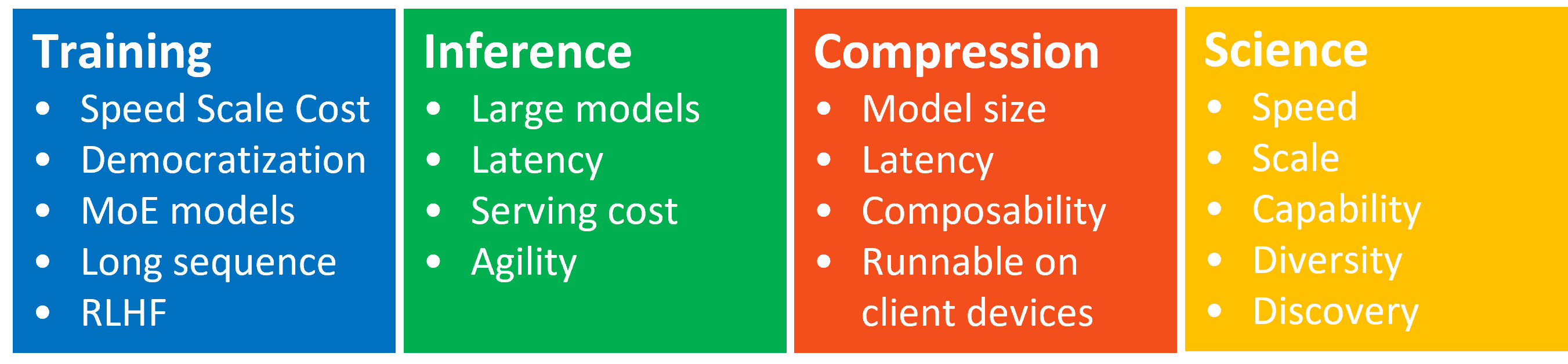

DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research

Microsoft's Open Sourced a New Library for Extreme Compression of Deep Learning Models | by Jesus Rodriguez | Medium

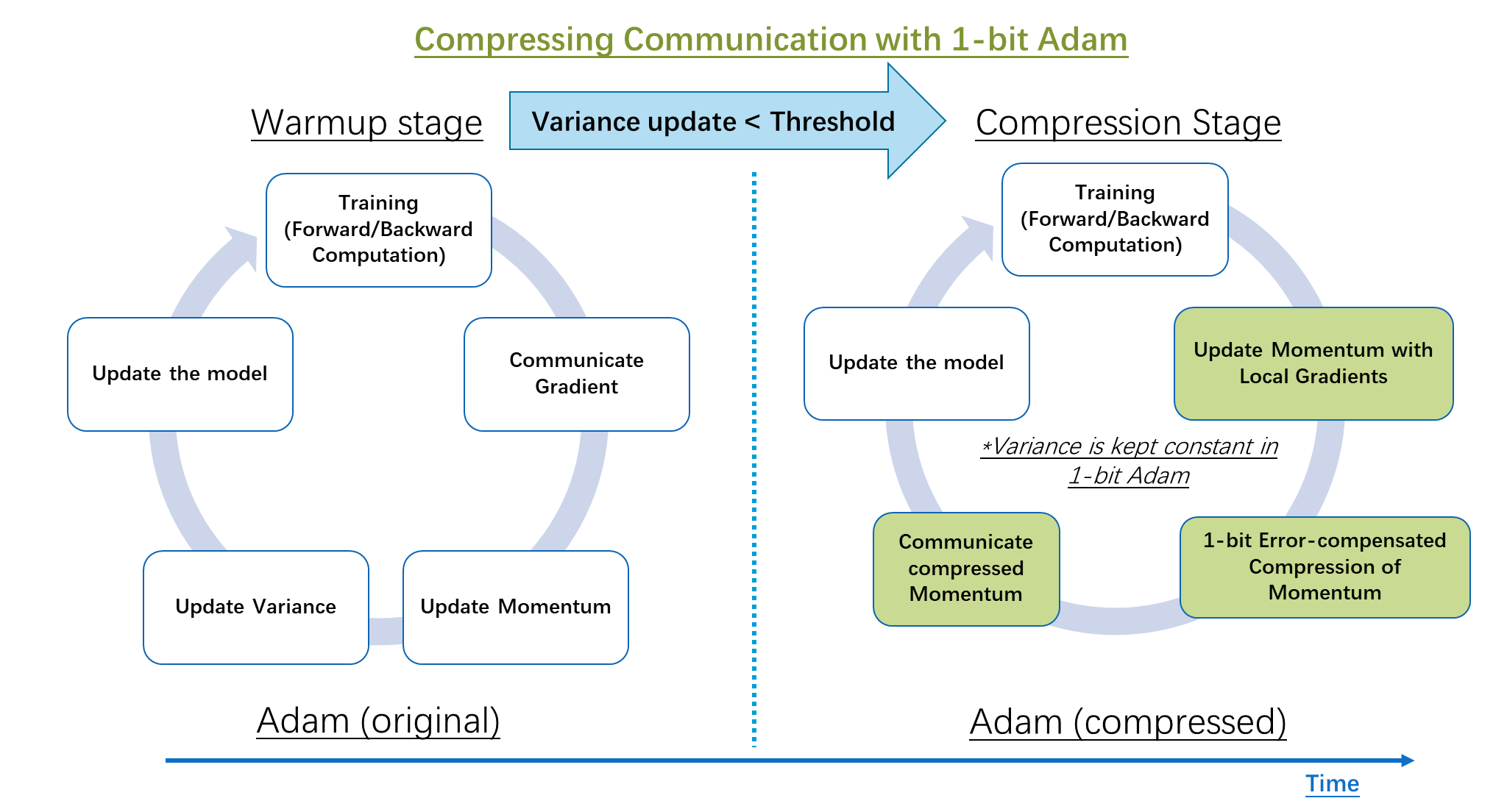

Introduction to scaling Large Model training and inference using DeepSpeed | by mithil shah | Medium

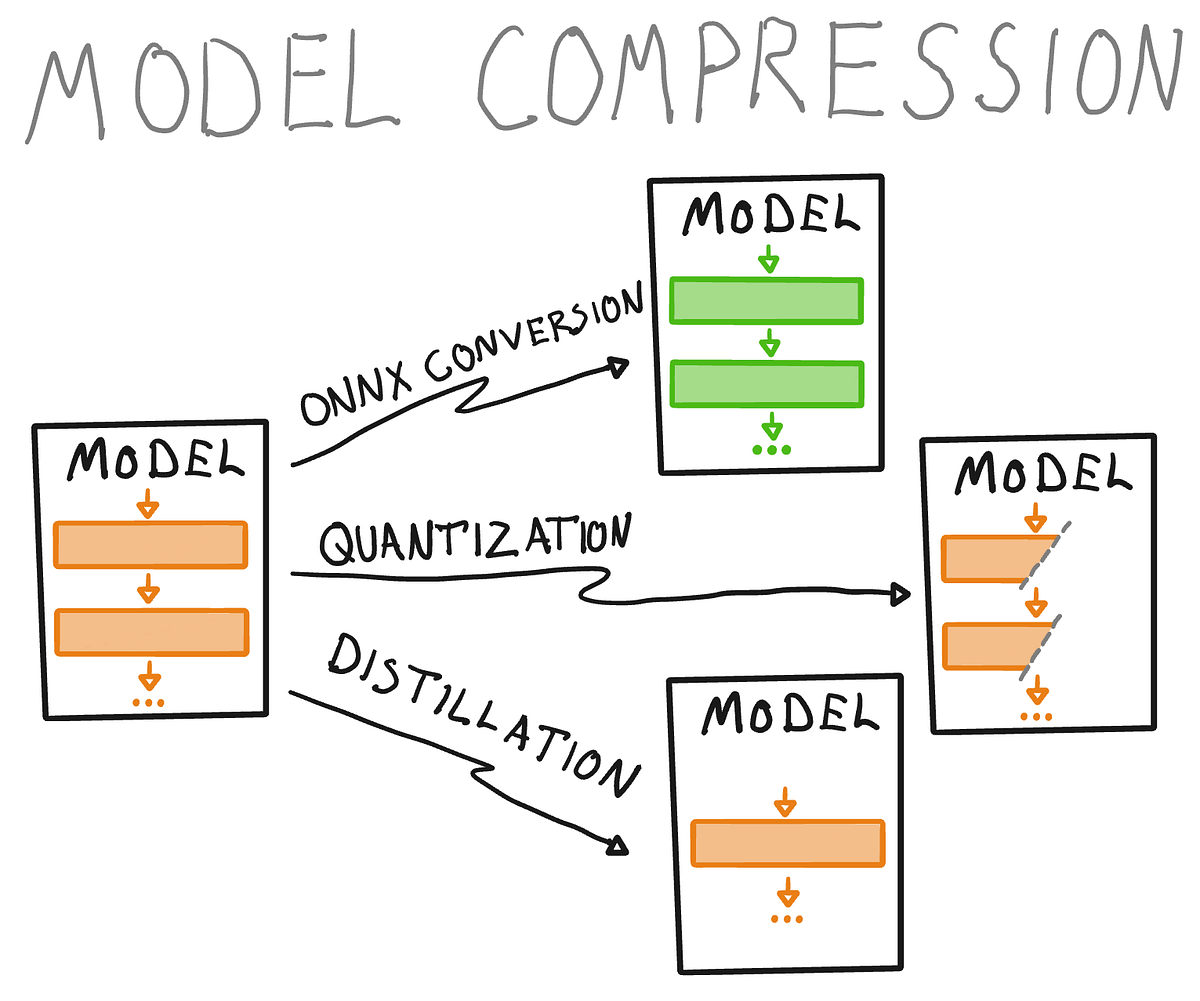

Model compression and optimization: Why think bigger when you can think smaller? | by David Williams | Data Science at Microsoft | Medium